ChatGPT Increasingly Used to Decide Who Loses Their Job

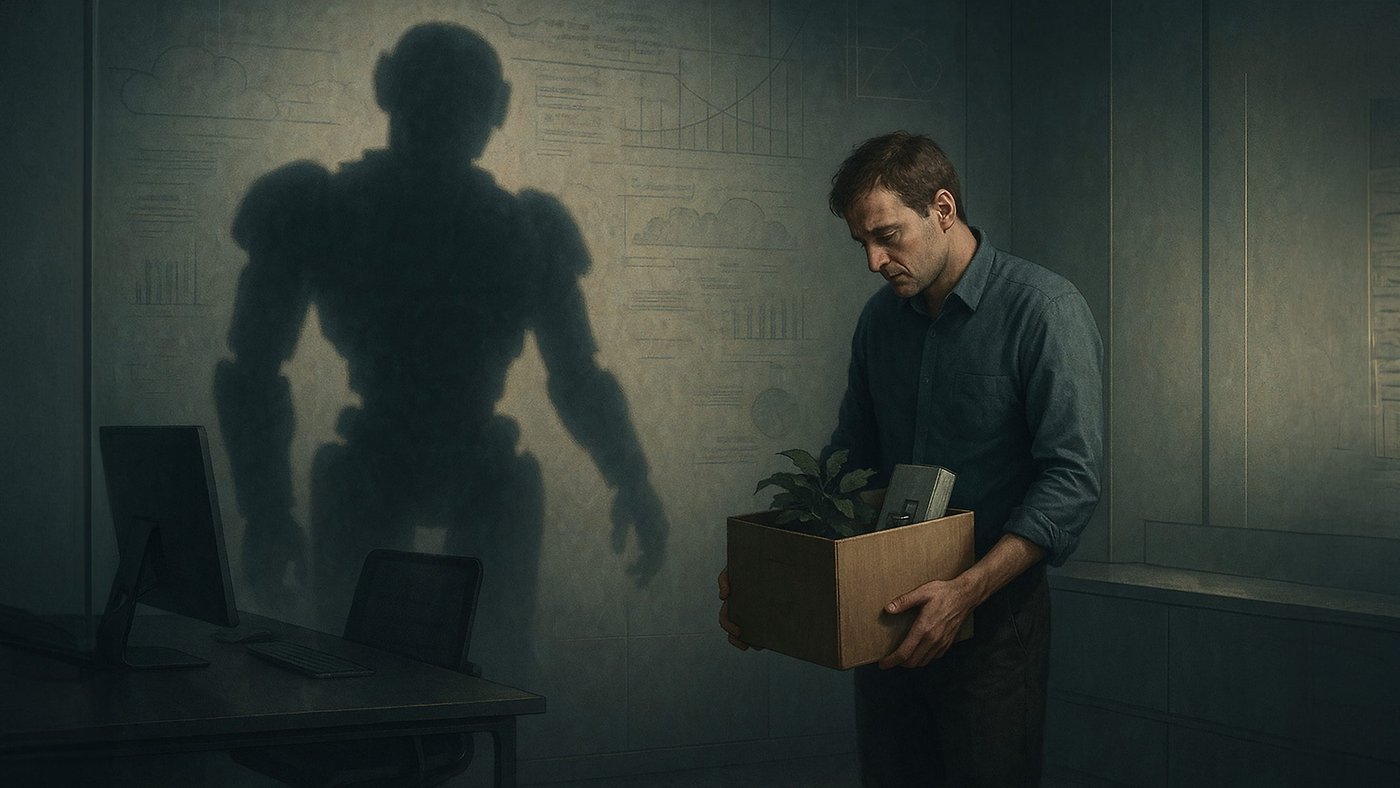

More and more people are worried about losing their jobs to artificial intelligence or robots. The bitter truth is that AI not only replaces human labor in many cases; it is increasingly being used to decide who gets the boot!

A survey from the USA is making people sit up and take notice. ResumeBuilder, a platform for job seekers, asked more than 1,300 human resources (HR) managers about their use of AI at work. The trend is unlikely to please many employees: chatbots such as ChatGPT are increasingly playing a role in personnel decisions.

Thumbs up or thumbs down? This is how ChatGPT decides whether you keep your job

In fact, 78 percent of respondents said they use large language models (LLMs) to decide whether someone is eligible for a pay rise. The proportion who use ChatGPT, Claude, or Gemini to determine who is entitled to a promotion is similarly high: a whopping 77 percent.

Another 66 percent seek advice from artificial intelligence when it comes to dismissals. Almost as many ask ChatGPT and similar AI models how best to carry out these dismissals. This means that AI not only plays a role in the decision itself but also helps shape the wording, perhaps to soften the blow.

This is where ethical questions arise, and one figure raises them more sharply than any other: one in five of those surveyed said that ChatGPT even makes the final decision on whether someone is fired!

AI decides: good idea or bad idea?

The survey revealed that around half of HR decision-makers rely on ChatGPT, followed closely by Microsoft Copilot and Google Gemini. What all these platforms have in common is that they have no personal preferences when it comes to personnel decisions. This sounds positive at first: after all, nobody is held back because a manager simply doesn't like their face, and nobody gets an undeserved promotion for being related to the boss.

So yes, in theory, a decision made with the help of AI could be fairer, as long as the data and rules are fair and everything is communicated transparently. Pre-selecting the relevant candidates could also become more efficient and objective.

What speaks against AI assistance

However, we must ask ourselves ethical questions and also question how objective these tools really are. Anyone who regularly uses ChatGPT will have noticed that the LLM tends to tell us what we want to hear. As a result, AI often reinforces and validates our existing impressions. We also have to take into account bias that can stem from the training data and from the prompts used.

Above all, however, an AI lacks context: how has a person developed over the years, what role do they play in the team, and how well do they fit in interpersonally? These are things that may be underrepresented in the data the AI works with. An AI decision could also help a company shirk responsibility when it comes to tough decisions that affect people. Combined with a sense that employees are being dehumanized and treated as interchangeable, this could have a dramatic impact on the working atmosphere.

AI tipping the scales? Not at the moment!

The trend is probably unstoppable, but it should still be viewed with a great deal of skepticism. AI can already provide valuable support in human resources, but as things stand, it is anything but a good idea to leave the final decision to ChatGPT.

We live in times in which AI is not (yet) replacing our jobs on a large scale. However, it is already being used as an excuse for layoffs. There is reason to fear that companies will become even less hesitant about cutting jobs if they can now also place the blame on AI.